Overview

Aegiq is excited to announce two papers that describe our radical approach to the fundamental challenges of delivering scalable photonic quantum computers. We use photons as qubits to perform quantum computation. In these papers, we outline key aspects of our architecture that demonstrate a new and world-leading approach to building fault-tolerant quantum computers that will scale beyond 1 million useful qubits.

- “Generating redundantly encoded resource states for photonic quantum computing” describes a novel protocol, compatible with our world-leading quantum dots, that overcomes the limitations of probabilistic two-qubit gates in photonics. It provides the building blocks for a fundamentally new approach to addressing scalability challenges in quantum computing.

- “QGATE (Quantum Gate Architecture via Teleportation & Entanglement)” increases performance by (a) reducing the number of operations the quantum computer needs to perform, (b) reducing compile time for large quantum operations from an exponential scaling problem to a linear one (compile time is an often-overlooked cost that can negate any advantage from quantum hardware), and (c) achieving error-correction thresholds for photon loss of up to 26%.

Building quantum computers that scale to commercial utility, and that are performant, cost-effective and able to integrate into existing compute environments, requires: (i) scaling up quantum computing chip performance and (ii) scaling out through quantum interconnects between chips and modules. Aegiq uses the standard high-volume telecom supply chain and optical fibre to do both.

At the foundation of our approach are our world-leading semiconductor quantum dots operated as quantum light sources. We use them to create the entangled resource states – the building blocks for our architecture.

Building on our sources of near-ideal photons, QGATE, enabled by Aegiq’s Deterministic Resource State Generation, offers a larger gate set than the standard gate set of other modalities, with a vastly smaller footprint than other photonic approaches. Many simple gates map directly to the underlying hardware. Complex gates can be decomposed into a small number of operations (in certain cases exponentially fewer than in other architectures and modalities), which are then realised on our underlying photonic quantum hardware.

The Pre-compute and Compilation Scalability Challenge Facing Legacy Modalities

In a typical quantum computing process, there are a number of steps that need to be performed:

- Define the problem to be solved in a high-level form consisting of large many-body Hamiltonians or arbitrary operators;

- Convert this to a quantum circuit – typically a sequence of unitary operations, built from a universal gate set (e.g., single-qubit rotations and two-qubit entangling gates like CNOT). At this stage, the circuit is hardware-agnostic and focuses on logical operations;

- This quantum circuit is passed to a quantum compiler which transforms the abstract algorithm into a low-level, hardware-executable circuit. This involves several key steps to ensure the circuit is optimized, fault-tolerant, and compatible with the limited types of gates which can be implemented in the underlying physical quantum hardware:

  - Gate synthesis decomposes high-level unitary operations into sequences of gates from the native gate set of the target hardware. This step is computationally expensive, especially for the arbitrary unitaries used in algorithms like Quantum Phase Estimation (QPE), which is common in simulations of quantum systems in materials science, chemistry, and fluid dynamics. The complexity of this decomposition often scales exponentially with the number of qubits and the desired precision.

  - Qubit mapping assigns logical qubits to physical qubits on the device. With superconducting qubits or trapped ions, where qubits are typically arranged in a grid or line, direct interaction is often limited to adjacent qubits. To perform operations between distant qubits, SWAP gates must be inserted. This routing introduces significant overhead, as each SWAP gate is typically decomposed into three CNOT gates, and can quickly become a major blocker to scalability.

For complex quantum algorithms, pre-compute and compilation steps that scale exponentially can negate any benefit from a quantum computer, and can also demand physical implementations at a scale that is not achievable.
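As a rough illustration of the routing overhead described above, the following minimal sketch counts the extra gates needed to run one CNOT between two qubits on a line of nearest-neighbour qubits. It assumes a naive routing strategy (move one qubit next to the other, do not swap back) and the standard decomposition of one SWAP into three CNOTs. The function names are hypothetical and the model is deliberately simplified; real compilers use more sophisticated routing.

```python
def swaps_on_line(a: int, b: int) -> int:
    """SWAPs needed to bring the qubits at line positions a and b next to each other."""
    if a == b:
        raise ValueError("a two-qubit gate needs two distinct qubits")
    return abs(a - b) - 1


def routed_cnot_cost(a: int, b: int, cnots_per_swap: int = 3) -> int:
    """Total CNOT count for one routed CNOT: SWAP overhead plus the gate itself."""
    return cnots_per_swap * swaps_on_line(a, b) + 1


if __name__ == "__main__":
    # Print the cost of a single logical CNOT for a few qubit separations.
    for distance in (1, 2, 10, 100):
        print(distance, routed_cnot_cost(0, distance))
```

In this toy model the cost of a single gate grows with the distance between the qubits, and the overhead is paid again for every non-adjacent gate in the circuit.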

Aegiq MBQC Approach with Deterministic Resource States

Aegiq employs a Measurement-Based Quantum Computing (MBQC) architecture for our modular photonic quantum computer. Rather than applying sequences of gates to static qubits, MBQC begins by creating a large entangled multi-photon state (a cluster state) and performs computation and error correction entirely through measurements of single photons.
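To make the idea of computing by measurement concrete, the sketch below simulates the smallest possible case: one input qubit is entangled with one fresh |+> qubit by a controlled-Z gate, and the input qubit is then measured in a rotated basis. This is the textbook MBQC building block, not a model of Aegiq's hardware or of a full cluster state. The names `mbqc_step` and `reference_output` are hypothetical, and the measurement outcome is passed in rather than sampled so that both branches can be inspected.

```python
import cmath
import math


def mbqc_step(psi, theta, outcome):
    """Measure the input qubit of a two-qubit cluster; return the output qubit state.

    psi     -- (a, b), amplitudes of the input qubit a|0> + b|1>
    theta   -- angle of the measurement basis (|0> +/- e^{i theta}|1>)/sqrt(2)
    outcome -- 0 for the '+' result, 1 for the '-' result
    """
    a, b = psi
    s = 1 / math.sqrt(2)
    # State after CZ on |psi>|+>: a|0>|+> + b|1>|->, stored as amp[input][output].
    amp = [[a * s, a * s], [b * s, -b * s]]
    sign = 1 if outcome == 0 else -1
    # Bra of the observed basis state: (<0| + sign * e^{-i theta} <1|)/sqrt(2).
    bra = (s, sign * cmath.exp(-1j * theta) * s)
    out = [bra[0] * amp[0][k] + bra[1] * amp[1][k] for k in range(2)]
    norm = math.sqrt(sum(abs(x) ** 2 for x in out))
    return [x / norm for x in out]


def reference_output(psi, theta, outcome):
    """Same result written as gates: X^outcome * H * diag(1, e^{-i theta}) on psi."""
    a, b = psi
    b = b * cmath.exp(-1j * theta)              # phase gate diag(1, e^{-i theta})
    s = 1 / math.sqrt(2)
    out = [(a + b) * s, (a - b) * s]            # Hadamard
    if outcome == 1:
        out.reverse()                           # Pauli X byproduct
    return out
```

The choice of measurement angle selects the rotation that is applied, and the random outcome only contributes a known Pauli byproduct that can be tracked classically. In this picture, that is how a computation is driven by how qubits are measured.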

We supercharge MBQC by adding deterministic resource state generation based on our quantum dot technology. This provides immediate benefits:

- Photons can be entangled and measured flexibly, without the spatial constraints of solid-state qubits.

- We can deterministically generate resource states. Unlike probabilistic photonic systems using, e.g., spontaneous parametric down-conversion, the entangled resource state is produced every time the system is triggered, with no need to wait for success or repeat until success.

Deterministic generation of resource states solves the first scaling bottleneck in photonic MBQC. Reliable, on-demand creation of entangled photons massively reduces the number of photonic components required to create basic resource states, a number that can otherwise scale exponentially.

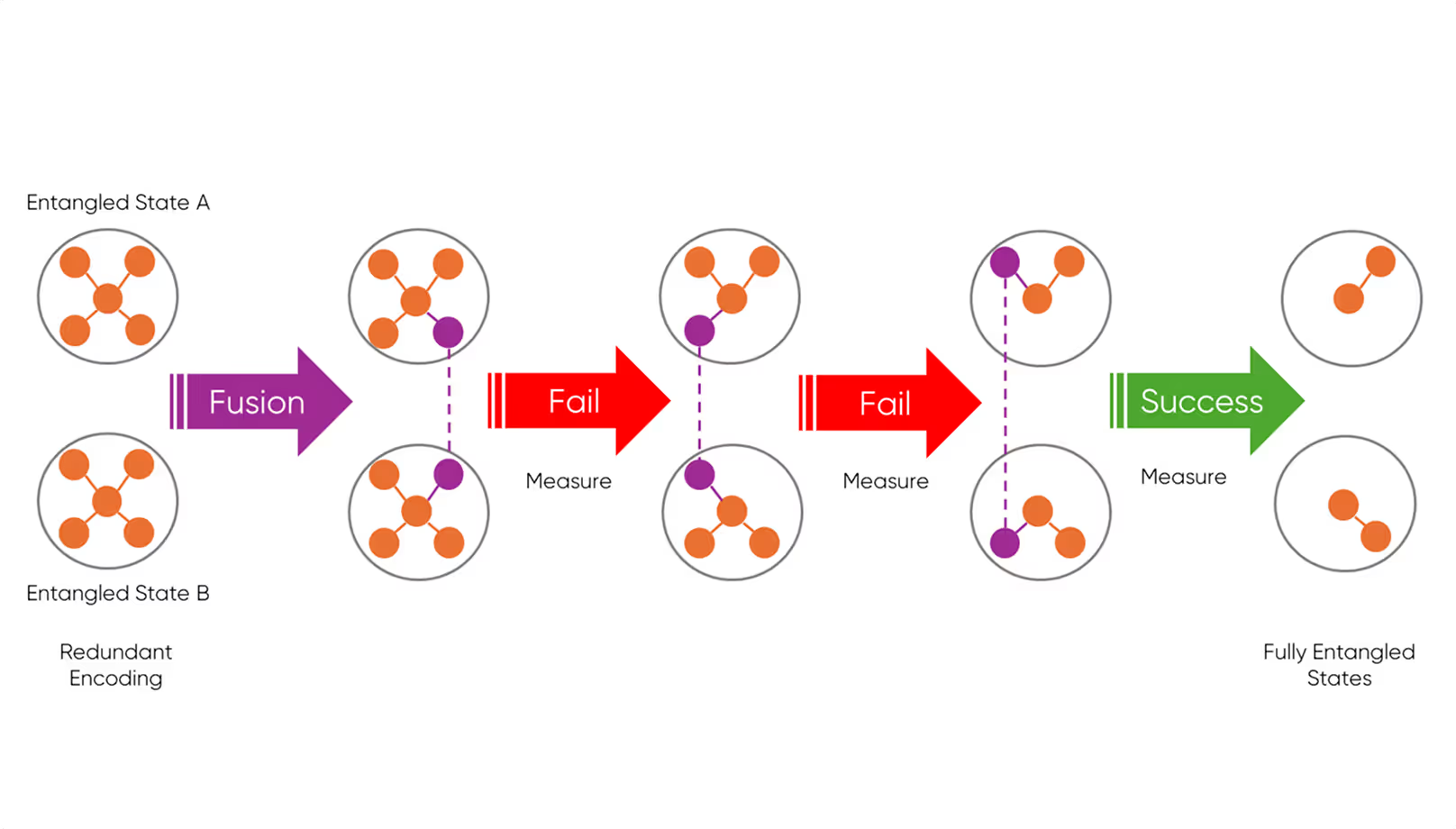

To construct large photonic cluster states for MBQC, small resource states need to be ‘fused’ together. This can be performed using Type-II fusion. However, this is a probabilistic process: one photon is selected from each of two entangled photonic states and the pair is measured together, whether or not the entanglement succeeds, resulting in a 50% success probability. This creates the second scaling bottleneck in photonic MBQC.

The paper “Generating redundantly encoded resource states for photonic quantum computing” proposes a practical solution to this scaling problem through a novel fusion protocol.

The protocol employs redundant encoding with entangled states (multiple qubits in highly entangled states) that act as ancilla-like (helper) photonic qubits. In this way we can repeat the fusion operation, with a route to reaching close to 100% success rates. This enables us to target building logical qubits from fewer physical qubits, with a path to only 256 physical qubits per logical qubit at logical error rates below 10⁻⁸.
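A simple way to see why repeating the fusion operation helps is to treat each attempt as an independent trial. The sketch below assumes that every attempt succeeds with the 50% probability quoted above for Type-II fusion and that the redundant encoding allows a fixed number of attempts. Both are illustrative assumptions and not figures taken from the paper; the function name is hypothetical.

```python
def repeated_fusion_success(attempts: int, p_single: float = 0.5) -> float:
    """Probability that at least one of `attempts` independent fusions succeeds."""
    if attempts < 1:
        raise ValueError("at least one fusion attempt is required")
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be a probability")
    # The overall operation fails only if every single attempt fails.
    return 1.0 - (1.0 - p_single) ** attempts


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        print(n, repeated_fusion_success(n))
```

Under these assumptions the failure probability halves with each additional attempt, which is the intuition behind approaching near-deterministic fusion. The actual protocol and its resource costs are described in the paper.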

QGATE

QGATE builds on Aegiq’s quantum dots, the ability to construct large cluster states, and the novel approach to fusion described above to create a blueprint for quantum computers that scale to commercial utility by:

- Significantly reducing component count and physical size to make on-prem, rack deployment a reality.

- Reducing compilation time, which can otherwise negate any benefit from quantum hardware, by up to several orders of magnitude.

- Improving the thresholds for photonic loss, bringing the era of photonic quantum error correction into the near term.

The QGATE paper demonstrates a unique approach to achieving arbitrary qubit connectivity, making it possible to entangle or apply gates between any pair (or group) of qubits regardless of their physical location or layout in the hardware. Any qubit can interact with any other qubit directly, with no need for intermediate gates or routing.

Applying complex non-Clifford gates directly to a qubit is extremely hard in fault-tolerant quantum computing. Instead, QGATE uses gate teleportation: entanglement and measurement apply the gate indirectly via gate ancillas (helper qubits). This vastly reduces complexity and makes the quantum computation highly parallelizable and error-resistant.
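The general principle of gate teleportation can be illustrated with the textbook single-qubit example, in which the non-Clifford T gate is applied to a data qubit using a pre-prepared ancilla, one CNOT and one measurement. The sketch below is a minimal state-vector simulation of that standard circuit. It is not the QGATE protocol itself, the function names are hypothetical, and the measurement outcome is passed in so that both branches can be checked.

```python
import cmath
import math

OMEGA = cmath.exp(1j * math.pi / 4)  # T = diag(1, OMEGA); S = diag(1, 1j)


def teleport_t_gate(psi, outcome):
    """Apply T to psi = (a, b) via an ancilla in the state (|0> + OMEGA|1>)/sqrt(2).

    outcome is the Z-basis measurement result (0 or 1) on the ancilla.
    Returns the data qubit after the outcome-dependent S correction.
    """
    a, b = psi
    s = 1 / math.sqrt(2)
    ancilla = (s, OMEGA * s)
    # Joint amplitudes amp[data][ancilla] of the product state.
    amp = [[a * ancilla[0], a * ancilla[1]], [b * ancilla[0], b * ancilla[1]]]
    # CNOT with the data qubit as control: flip the ancilla when data is |1>.
    amp[1].reverse()
    # Z measurement of the ancilla: keep the branch matching the outcome.
    data = [amp[0][outcome], amp[1][outcome]]
    norm = math.sqrt(abs(data[0]) ** 2 + abs(data[1]) ** 2)
    data = [x / norm for x in data]
    if outcome == 1:
        data[1] *= 1j  # Clifford correction S, applied only for outcome 1
    return data


def overlap(u, v):
    """Magnitude of <u|v>; equals 1 when the states match up to a global phase."""
    return abs(sum(complex(x).conjugate() * y for x, y in zip(u, v)))


if __name__ == "__main__":
    psi = (0.6, 0.8j)
    target = [psi[0], OMEGA * psi[1]]  # T applied directly
    for m in (0, 1):
        print(m, overlap(target, teleport_t_gate(psi, m)))
```

The hard part of the gate lives in the ancilla state, which can be prepared separately, while the data qubit only sees a CNOT, a measurement and a simple correction.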

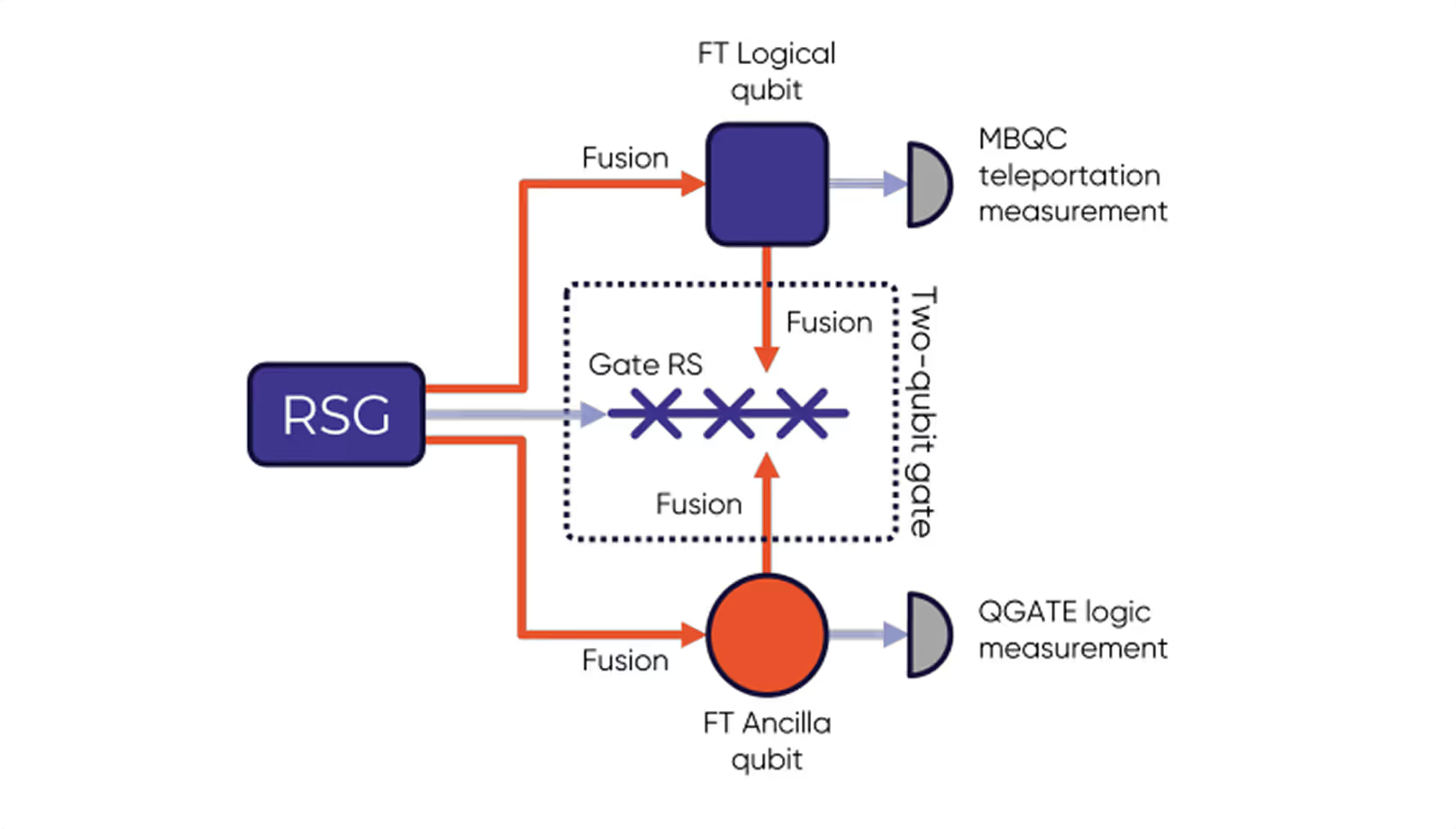

Figure 2 shows how this is achieved. The computation process starts with resource state generators (RSGs) made up of arrays of deterministic photon emitters (quantum dots) – providing entangled sets of photons, which serve as building blocks for computation. These are then entangled via fusion to generate three sets of resource states:

- fault-tolerant logical qubits

- ancilla qubits

- two-qubit gates, which use the additional resource states and fusion measurements to entangle logical qubits with QGATE ancilla qubits.

The entanglement of logical and ancilla qubits is crucial for applying multi-qubit operations. Single-qubit measurements are performed on the ancilla qubits. These measurements, combined with prior entanglement, apply quantum gates to the logical qubits. This is a measurement-based approach, meaning computation is driven by how and when qubits are measured.

QGATE logical qubits are constructed using foliated quantum error correction codes, which are well-suited to photonic MBQC. Measurement and entanglement as described above are also used to teleport the logical qubit states through layers of the foliated error correction codes. This allows the logical qubit to evolve and move through the computation while remaining protected from errors. QGATE can perform these operations fault-tolerantly, without needing to decode or re-encode the logical qubits, with high photon loss thresholds of up to 26%. This is significantly above the figures reported to date, and it matters because a higher threshold makes logical qubits more resistant to errors.

QGATE enables direct implementation of arbitrary multi-qubit unitaries without decomposition into a finite gate set. This reduces compile time from exponential to linear scaling, while reducing the physical components to a size realisable in standard data centre racks. This is critical in overcoming the barrier to scale, enabling a quantum computer capable of executing the complex quantum algorithms used in chemistry, materials simulation, computational fluid dynamics (CFD), etc., which require multi-qubit entanglement or sparse Hamiltonians.

Using QGATE, the typical steps in the quantum computing process can be massively simplified and reduced.

With QGATE, we improve quantum compute runtimes, reduce compile time from an exponentially scaling problem to one that scales linearly, and show the path to improved quantum error correction thresholds for photonic loss. Along with our deterministic photon sources, which can reduce the number of physical components required by several orders of magnitude, we are breaking through the key barriers to scaling quantum computing.